Let Me Ask You This: How Can a Voice Assistant Elicit Explicit User Feedback?

Quality user feedback is important for making good music recommendations.

Recommending the right music to a user at the right time is a hard challenge, as recommender systems need to closely understand what a given user wants in a given context while keeping user privacy top of mind. Pragmatically, researchers and engineers rely on user feedback, such as users’ clicks, skips, or comments, to build quality machine learning models to improve the user experience. Although recommender systems leverage many different kinds of user feedback to optimize recommendations, a lot of this feedback can be quite noisy or ambiguous. For example, when a user skips a song that the system believes to be a perfect fit, how should the system interpret this skip? Knowing if the recommendation actually wasn’t a great fit or if the user was simply in an unexpected context can have a big impact on future recommendations.

Could voice assistants help improve music recommendations for listeners?

Voice assistant interactions present a particular challenge, as they often involve vague user requests like “play music” or “play something” [1]. At the same time, voice assistants might offer a unique opportunity to address this challenge. During conversational interactions themselves, voice assistants could provide users with options to give better, more direct in-situ feedback, which contains less noise than indirect user signals [2, 3, 4]. For example, when a recommender system finds a ‘skip’ to be ambiguous, the voice assistant could simply ask the user for the reason behind it.

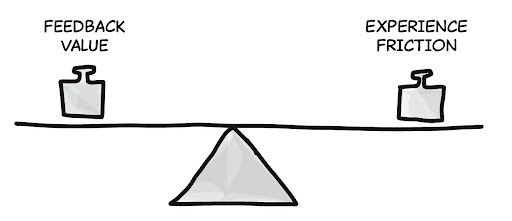

Though such interactions would be valuable for improving the underlying systems, it is likely that they could be quite annoying and intrusive for the user. To better understand this dynamic, we explored design opportunities for how voice assistants could empower users to leave better feedback without getting in the way of enjoyable interactions.

What types of explicit user feedback are valuable to the people who build recommender systems?

To learn when in-situ feedback is most valuable, we started our research by interviewing machine learning experts at Spotify who build and evaluate ML models on a daily basis. This allowed us to identify four categories of explicit user feedback that are most important to creating a better user experience.

Clarifying User Input

When the user issues a query to the system but the query is too ambiguous for the system to make a high-confidence decision, the voice assistant can prompt the user for clarification.

Clarifying Behavioral Signals

When a user’s signals — such as duration of user engagement, skipping content, or completing content to the end — contradict each other, the voice assistant can step in and collect the user's real intention.

Collecting Feature Feedback

When introducing a new feature, it is often initially unclear what signals are most meaningful or reliable. The voice assistant can directly ask for user feedback on the new feature.

Understanding User Context

The voice assistant can ask about the user’s context, which could be used to improve user models and recommender systems.

What design choices could reduce friction in the user experience?

In the second part of our research, we explored how this might look from the user’s perspective. We looked at three design dimensions of a voice assistant: (1) the framing of the assistant, (2) its elicitation strategy when asking for feedback, and (3) the level of proactivity. Previous studies have shown that those three dimensions play important roles in human-agent interaction and information elicitation [5,6,7].

To evaluate various voice assistant designs, we conducted an online experiment. We generated realistic usage scenarios based on the four categories we derived from our ML practitioner interviews, then we created variations of those scenarios for the three design dimensions outlined above.

For framing the assistant, we prompted each user to view the assistant in one of three ways:

Assistant (“Imagine you have a voice assistant that helps you with your daily tasks.”)

Learner (“Imagine you have a voice assistant that’s always learning how to better help you with your daily tasks.”)

Collaborator (“Imagine you have a voice assistant that collaboratively works with you on your daily tasks”)

These framings signaled different levels of agent competency and common human-agent relationships.

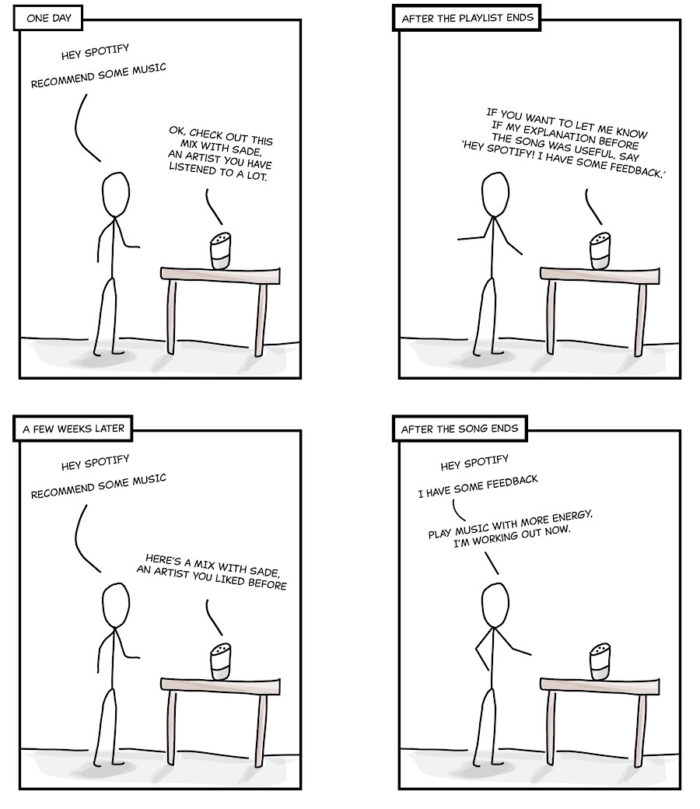

For elicitation strategy, we considered two ways the assistant could get feedback from the user: (1) asking the user for feedback through a direct question, or (2) instructing the user on how to give feedback. For level of proactivity, we looked at how closely the assistant’s prompts were linked to the user's input. In some scenarios the assistant’s prompt was in direct response to the user input that preceded it, while in other scenarios the prompts were not connected to a specific user interaction.

What we learned:

People care that the assistant learns and works with them.

In our study, people were more willing to respond to an assistant introduced as a learning or collaborating voice assistant. Prompts for feedback in these conditions were also perceived as less disruptive.

The results may be explained by the well-known CASA paradigm that describes how people respond to conversational assistants as though they are social entities. When we framed the assistant as a Learner, it signaled limited capabilities but also an agency for improvement. Just like when people are more tolerant to a learning “student’s” shortcomings and more motivated to provide feedback, in our study it might have made participants more willing to respond to the assistant. Signaling the agency to improve might have made our participants feel that their feedback will ultimately improve their own experience and thus pay off in the long run. Our Collaborator framing emphasized the shared goal of creating a better user experience for the user. This is known to increase users’ motivation to collaborate with an agent’s requests [8]. So the communication of the shared goal might have led to the higher willingness to respond that we observed in our participants.

People prefer to be instructed on how to give feedback rather than be asked for it directly.

Our results show that instead of being asked for feedback directly by the assistant, people prefer receiving instructions on how to give feedback. Instructions were perceived as less disruptive than direct questions, without a negative impact on the willingness to respond.

People may feel less put-upon when getting instructions, appreciating the choice to opt in or ignore the prompt. Though one may presume users would not give feedback unless directly asked for it, we did not observe a significant difference in users’ willingness to give feedback in our results. Therefore, providing instructions may elicit a similar amount of user feedback while avoiding unnecessary disruptiveness.

Moving to the future:

Mediating collaborations in recommender systems

Building a recommender system could be described as a collaborative process between the different stakeholders who build these systems (e.g., machine learning engineers, designers, researchers) and millions of end-users.

In our study, we found people are open and interested in responding to a voice assistant’s feedback requests—and perceive the interaction as less disruptive—when the assistant can show that its goals are to improve for the user’s benefit. In our study we only communicated this framing to the user through an introductory sentence, but in practice this should be conveyed throughout all feedback interactions. User researchers and designers could work together with the people who build the recommender systems to design interactions that can help elicit feedback in a more enjoyable way, and to report back to the user how their feedback will, or already has, impacted their experience. This would not only give the user more incentive to respond with high-quality feedback, it would allow them to create a better mental model of how the underlying systems work.

Designing for a long-term and evolving relationship with an assistant

An important consideration for the design of voice assistants is that a user’s experience is not shaped by a single interaction [9], and user expectations for the voice assistant change over time. This evolving relationship provides opportunities for the elicitation strategy to adapt over time, too: as the user becomes more familiar with the ways the voice assistant asks for feedback, the assistant’s prompts could be adjusted. In the beginning the user might need more verbose, detailed instructions for how to provide feedback, then over time the instructions could become shorter. At some point it might just become a user expectation that they can give feedback to improve the assistant’s capabilities.

As voice assistants become more reliable and intuitive through the improvement of the underlying technologies, user attitudes towards their capabilities will likely improve. We found in our study that the more positive the pre-existing attitude a user had toward the voice assistant, the more willing the user was to answer a voice assistant’s prompt, while also perceiving the disruption to be lower. This suggests that in the future, with careful design of when and how to offer options for feedback, users might enjoy giving voice assistants feedback and consider these options as a core part of the voice user experience.

References

[1] Thom, T., Nazarian, A., Brillman, R., Cramer, H. & Mennicken, S. (2020). Play Music: User Motivations and Expectations for Non-Specific Voice Queries. In Proceedings of the 21th International Society for Music Information Retrieval Conference, ISMIR 2020, Montreal, Canada, October 11-16, 2020 (pp. 654–661).

[2] Jawaheer, G., Szomszor, M., & Kostkova, P. (2010). Comparison of implicit and explicit feedback from an online music recommendation service. In proceedings of the 1st international workshop on information heterogeneity and fusion in recommender systems (pp. 47–51).

[3] Amatriain, X., Pujol, J., & Oliver, N. (2009). I like it... i like it not: Evaluating user ratings noise in recommender systems. In International Conference on User Modeling, Adaptation, and Personalization (pp. 247–258).

[4] Joachims, T., Granka, L., Pan, B., Hembrooke, H., & Gay, G. (2017). Accurately interpreting clickthrough data as implicit feedback. In ACM SIGIR Forum (pp. 4–11).

[5] Khadpe, P., Krishna, R., Fei-Fei, L., Hancock, J. T., & Bernstein, M. S. (2020). Conceptual metaphors impact perceptions of human-AI collaboration. Proceedings of the ACM on Human-Computer Interaction, 4(CSCW2), 1-26.

[6] Kim, A., Park, J.M., & Lee, U. (2020). Interruptibility for In-vehicle Multitasking: Influence of Voice Task Demands and Adaptive Behaviors. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 4(1), 1–22.

[7] Xiao, Z., Zhou, M., Liao, Q., Mark, G., Chi, C., Chen, W., & Yang, H. (2020). Tell Me About Yourself: Using an AI-Powered Chatbot to Conduct Conversational Surveys with Open-ended Questions. ACM Transactions on Computer-Human Interaction (TOCHI), 27(3), 1–37.

[8] Nass, C., Fogg, B., & Moon, Y. (1996). Can computers be teammates?. International Journal of Human-Computer Studies, 45(6), 669–678.

[9] Bentley, F., Luvogt, C., Silverman, M., Wirasinghe, R., White, B., & Lottridge, D. (2018). Understanding the Long-Term Use of Smart Speaker Assistants. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol., 2(3).